GALWORKS V.3.0 Modifications

T. Jarrett, IPAC

(000510); modified

Let me start the discussion by reiterating what I think are the most important mods/changes to GALWORKS and what the costs may be. I'll do this in order of preference (the first set are slam dunks). I'll also discuss proposed mods that I think are not practical. First, refer to Roc's "2MAPPS v3.0 Design" page for muchoo relevant details on the cost/benefit scenario of 2MAPPS V3.

GALWORKS V.3.0 Modifications

Note: the numbers in parentheses represent the final priority.

(1) 1. Generate coverage maps.

Motivation: Currently it is very difficult to figure out how much area is actually covered, due to masked pixels (generally from bright stars). The areal coverage is crucial for statistical purposes (e.g., source counts, angular correlations, etc.).

For each coadd (per band) we blank out pixels due to a number of factors, including (1) bad pixels, (2) scan edges, (3) bright (big) galaxies and (4) bright stars. Bright stars are by far the biggest contributor to lost sky. The idea is to generate an image cube (J, H, K planes) with I*8 characters. This allows simple integer coding (e.g., non-zero values could represent different types of masking).
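
A minimal sketch of the integer coding, assuming one bit per masking reason so that a single pixel can carry several reasons at once. The bit assignments, the 1"/pixel scale and the reading of "I*8" as one byte per pixel are our assumptions, not GALWORKS definitions; the last lines show the per-coadd storage those assumptions imply for a 512 x 1024 coadd.

```python
# Hypothetical bit assignments for the four masking reasons; a pixel value of 0
# means "covered". The real GALWORKS coding is still to be decided.
BAD_PIXEL = 1 << 0
SCAN_EDGE = 1 << 1
BIG_GALAXY = 1 << 2
BRIGHT_STAR = 1 << 3


def mask_pixel(plane, x, y, reason):
    """OR one masking reason into pixel (x, y) of a plane stored as a list of rows."""
    plane[y][x] |= reason


def covered_area_arcsec2(plane, pixel_scale_arcsec=1.0):
    """Sky area of unmasked (zero-valued) pixels, in square arcsec."""
    good = sum(1 for row in plane for value in row if value == 0)
    return good * pixel_scale_arcsec ** 2


def count_by_reason(plane, reason):
    """Number of pixels flagged for a given reason (a pixel may carry several)."""
    return sum(1 for row in plane for value in row if value & reason)


# Tiny 4 x 3 plane for illustration.
plane = [[0] * 4 for _ in range(3)]
mask_pixel(plane, 0, 0, BRIGHT_STAR)
mask_pixel(plane, 0, 0, SCAN_EDGE)   # same pixel, second reason
mask_pixel(plane, 3, 2, BAD_PIXEL)
print(covered_area_arcsec2(plane))          # 10.0
print(count_by_reason(plane, BRIGHT_STAR))  # 1

# Storage for one J, H, K cube of a 512 x 1024 coadd at 1 byte per pixel (assumption).
bytes_per_coadd = 512 * 1024 * 3 * 1
print(bytes_per_coadd)  # 1572864, about 1.5 MB
```

Bit flags keep the reasons separable, so the lost area can later be broken down by cause (e.g., how much sky the bright stars cost).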

Cost: Mostly disk space (TBD). Runtime is not an issue here since GALWORKS is already doing most of this work. I don't have an estimate of the additional disk space yet, but I'll try to estimate some numbers before the tcon.

(Final note: these are not the same "coverage" maps as those planned for pixphot.)

(2) 2. Convert "flux" (mag) limits to SNR limits.

Motivation: To employ more natural thresholding that is consistent with catalog generation.

Our use of mag thresholds has proven to be a nuisance, particularly since the Catalogs are SNR limited.

This mod is mostly straightforward. The only cost is the analysis to make sure that it is done correctly (Chester will need to assist with these changes and testing).
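
A minimal sketch of the difference, assuming the SNR is the source flux divided by background and confusion noise added in quadrature (the Catalog's own SNR definition may differ). The zero point and noise values used below are placeholders. With a fixed mag limit, the same 15th-mag source passes everywhere; with an SNR limit it passes in a quiet field and fails where the background noise is higher.

```python
import math


def mag_to_flux(mag, zero_point):
    """Convert a magnitude to instrumental flux given a zero point."""
    return 10 ** (-0.4 * (mag - zero_point))


def snr(mag, zero_point, sigma_bg, sigma_conf):
    """Signal-to-noise ratio, with background and confusion noise in quadrature."""
    sigma_total = math.hypot(sigma_bg, sigma_conf)
    return mag_to_flux(mag, zero_point) / sigma_total


def passes_mag_limit(mag, mag_limit):
    """Current style: a fixed faint limit, independent of the local noise."""
    return mag <= mag_limit


def passes_snr_limit(mag, zero_point, sigma_bg, sigma_conf, snr_limit):
    """Proposed style: the effective faint limit follows the local noise."""
    return snr(mag, zero_point, sigma_bg, sigma_conf) >= snr_limit


# A 15th-mag source (placeholder zero point 20) in a quiet and a noisy field.
print(passes_mag_limit(15.0, 15.0))                     # True in both fields
print(passes_snr_limit(15.0, 20.0, 10.0, 0.0, 5.0))     # True  (SNR = 10)
print(passes_snr_limit(15.0, 20.0, 30.0, 0.0, 5.0))     # False (SNR ~ 3.3)
```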

(8) 3a. Improve foreground star removal.

Motivation: Improve extended source photometry in high-source-density fields (e.g., the ZoA). In addition, we also improve the ellipse/orientation fitting, something useful for low-source-density fields as well (in particular, edge-on spirals). This modification is not strongly dependent on the lev-1 specs.

Foreground stars are a hindrance both to photometry *and* to orientation fitting. I have developed a method that improves this situation, particularly for high-source-density fields. See my page entitled "Improved Ellipse Fitting and Isophotal Photometry in Crowded Fields".

Our photometry in the ZoA is currently very ratty, with plenty of room for improvement. The extended source catalogs live and die by the quality of the photometry.

Testing: The method is mostly unproven, so a significant amount of testing is required. Fortunately, we have the data sets to do it. Skrutskie has kindly provided repeats of the Abell 3558 cluster. Repeatability tests will give a direct measure of how much improvement is to be had. We also have the Orion Nebula repeats, a set of high-source-density scans with which to test the method's feasibility in the ZoA (where the largest gains will be realized).
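
One way to score the repeats, as a sketch: take the scatter of each galaxy's magnitude across repeat scans, combine those into a single RMS, and compare the number for the current and the star-removed photometry. The input layout (a dict of source ID to repeat magnitudes) and the use of the sample standard deviation are our assumptions; the values below are toy numbers, not measurements.

```python
import statistics


def repeatability_rms(measurements):
    """RMS over sources of the per-source scatter in repeated magnitudes.

    measurements maps a source ID to the magnitudes measured in each repeat scan;
    sources with fewer than two measurements are skipped.
    """
    scatters = [statistics.stdev(m) for m in measurements.values() if len(m) >= 2]
    if not scatters:
        raise ValueError("need at least one source with two or more repeats")
    return (sum(s * s for s in scatters) / len(scatters)) ** 0.5


# Toy input: gal_c has a single measurement and is ignored.
toy = {"gal_a": [12.80, 12.90, 12.70], "gal_b": [13.10, 13.10], "gal_c": [14.0]}
print(round(repeatability_rms(toy), 4))  # 0.0707
```

Running this on the same galaxies before and after star removal gives the "direct measure of improvement" as a single number per band.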

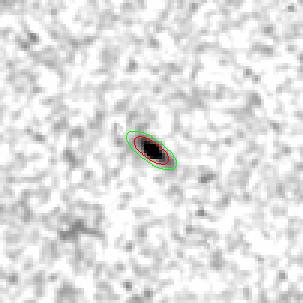

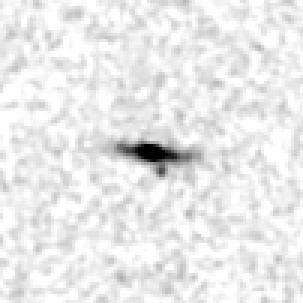

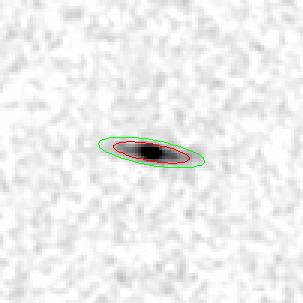

Here is an example of a typical galaxy in Abell 3558. It is 12.8 mag in K-band and has a contaminating star nearby (along its major axis). The images show the raw K-band field (left), the star-subtracted image (center), and the 3-sigma and 1-sigma (20 mag/arcsec^2) elliptical isophotes. The plot shows the mean surface brightness in elliptical annuli about the galaxy. The green dashed line corresponds to the isophotal radius (20 mag/arcsec^2). The red dotted line is the exponential fit to the disk, with extrapolation to eight times the disk scale length. The extrapolation adds 0.35 mag (i.e., the total magnitude of the galaxy is closer to 12.5).
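
The disk extrapolation can be written in closed form. As a sketch, assuming a pure exponential disk I(r) = I0 exp(-r/h) (no bulge) and that all of the flux inside the isophotal radius belongs to that disk, the enclosed fraction and the resulting magnitude correction are:

```python
import math


def exp_disk_enclosed_fraction(r_over_h):
    """Fraction of a pure exponential disk's total flux inside radius r = x*h.

    For I(r) = I0*exp(-r/h): L(<r) = 2*pi*I0*h**2 * [1 - (1 + x)*exp(-x)], x = r/h,
    and the total flux is 2*pi*I0*h**2.
    """
    x = r_over_h
    return 1.0 - (1.0 + x) * math.exp(-x)


def extrapolation_correction(r_iso_over_h, r_max_over_h=8.0):
    """Magnitude change from extrapolating the disk from r_iso out to r_max.

    Negative means the extrapolated (total) magnitude is brighter than the isophotal one.
    """
    ratio = exp_disk_enclosed_fraction(r_max_over_h) / exp_disk_enclosed_fraction(r_iso_over_h)
    return -2.5 * math.log10(ratio)


print(round(exp_disk_enclosed_fraction(8.0), 4))  # 0.997
print(round(extrapolation_correction(3.0), 3))    # -0.238
```

Under this pure-disk assumption, a 0.35 mag correction like the one above corresponds to an isophotal radius of roughly 2.5 disk scale lengths; real profiles with bulges will differ.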

Cost: Runtime. The increase in runtime is unknown; the repeat tests will provide an accurate assessment of it. We can offset the increase by eliminating some of the current GALWORKS functions that are not useful (and plenty of the 400+ columns we churn out can be jettisoned with only a small amount of pain).

To reiterate, I believe that resources should be focused on improving photometry. We know that most users of the extended source catalog(s) want this as the highest priority (with uniformity and reliability/completeness a close second).

(8) 3b. Add three more planes (J,H,K) to the postage stamp images, showing the foreground-star-removed images.

Motivation: Gives the user a "cleaned" version of the extended source on which they may perform additional tests (e.g., photometry). More importantly, it tells the user how well the pipeline removed the stars before the photometry measurements were made; this is currently impossible to derive from the Catalog. These new images will help the user understand what was subtracted *and* how well it was done. The user may also more easily apply tools (e.g., IRAF/STSDAS, GALPHOT) to perform photometry and orientation measurements on the star-removed images.

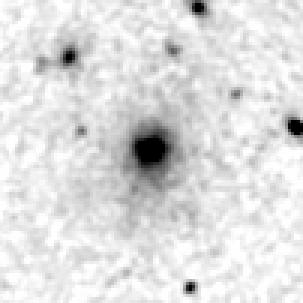

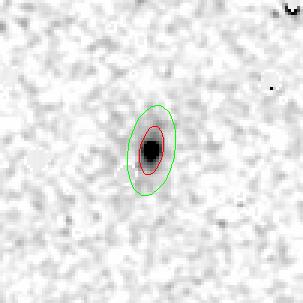

These examples show some galaxies with their neighboring stars removed. The images are the galaxy postage stamps. The first galaxy is a Hercules spiral used for Tully-Fisher (T-F) distance calibration. The second is a bright galaxy in the Abell 3558 cluster (note the smaller galaxies around it; they do not subtract away smoothly because they are not point sources). The last galaxy is a spiral located within the ZoA. The contours show the 3-sigma isophote (red), from which the elliptical orientation is derived, and the 20 mag/arcsec^2 isophote (green), the standard photometry isophote.

Cost: Disk space. This will double the size (ouch!) of the postage stamp images. Currently, with 90% of the sky complete, we are using 90 GB of disk for the postage stamps, so the full sky will need about 100 GB; doubling that means we will need about 200 GB for this idea. But note that most of the current 90 GB is bogus: we allow a lot of point sources to pass into the Ext SRC DB. These bogus sources are eliminated later with the Gscore. If we use the Gscore in the pipe itself (see #7 below), we can nuke these annoying sources right off the bat. I bet we can rid ourselves of 50 GB of false extended sources just from this obvious step.
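
The disk estimate, spelled out as arithmetic. The first two numbers follow directly from the text; the last one is a hypothetical that assumes the ~50 GB of false sources scales with sky coverage and is removed before the new planes are written.

```python
def full_sky_gb(current_gb, fraction_complete):
    """Scale current disk usage linearly to full-sky coverage."""
    return current_gb / fraction_complete


current_gb = 90.0       # postage stamps on disk today (from the text)
fraction_done = 0.90    # fraction of the sky processed so far (from the text)

baseline = full_sky_gb(current_gb, fraction_done)   # full sky, J,H,K planes only
doubled = 2.0 * baseline                            # plus star-removed J,H,K planes

# Hypothetical: the ~50 GB of false extended sources scales with sky coverage
# and is removed (via the Gscore) before any stamps are written.
false_gb = 50.0
doubled_after_cut = 2.0 * full_sky_gb(current_gb - false_gb, fraction_done)

print(round(baseline), round(doubled), round(doubled_after_cut))  # 100 200 89
```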

(3) 4. Improve bright (big) galaxy processing (and removal, as the case may be).

Motivation: We use a blunt stick on big galaxies. There is a lot of room for improvement. Not relevant to lev-1 specs.

Currently we take a conservative approach and blank large areas around optically big galaxies. This sometimes works, but at other times it is too conservative. I think we can take a more positive approach and let GALWORKS process all galaxies, big and small alike.

Implications: Big galaxies are problematic in a number of ways, the most vexing being the background removal; that is, it is not possible to determine the background with the standard approach in a coadd containing a big galaxy. So, when we are dealing with big galaxies, we need an approach more robust than the standard one. I propose that we use a single value as the representative background for cases in which we have a big galaxy in the coadds (or in adjacent coadds). We determine this background from either the median or the mode of the pixel histogram, blanking the core of the galaxy before generating the histogram. With the background subtracted in this manner, it is a simple matter for GALWORKS to proceed with processing the big galaxy.
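
A minimal sketch of the single-value background, assuming the coadd is a list of pixel rows, the galaxy core is blanked as a circle of given radius, and the mode is taken as the centre of the most populated histogram bin. The bin width is a hypothetical parameter; in practice it would be tied to the pixel noise.

```python
import math
import statistics


def histogram_mode(values, bin_width):
    """Centre of the most populated histogram bin (a crude mode estimate)."""
    counts = {}
    for v in values:
        b = math.floor(v / bin_width)
        counts[b] = counts.get(b, 0) + 1
    best = max(counts, key=counts.get)
    return (best + 0.5) * bin_width


def background_outside_core(image, x0, y0, core_radius, bin_width=1.0):
    """Median and histogram mode of the pixels outside a blanked galaxy core."""
    values = [
        v
        for y, row in enumerate(image)
        for x, v in enumerate(row)
        if (x - x0) ** 2 + (y - y0) ** 2 > core_radius ** 2
    ]
    return statistics.median(values), histogram_mode(values, bin_width)


def subtract_constant(image, background):
    """Subtract one representative background value from every pixel."""
    return [[v - background for v in row] for row in image]


# Toy 5 x 5 coadd: flat sky of 100.2 with a bright 3 x 3 "galaxy" in the middle.
image = [[100.2] * 5 for _ in range(5)]
for y in range(1, 4):
    for x in range(1, 4):
        image[y][x] = 500.0
median, mode = background_outside_core(image, 2, 2, core_radius=1.5)
print(median, mode)  # 100.2 100.5
cleaned = subtract_constant(image, median)
```

The median is the simpler choice; the mode is less pulled by the galaxy's outer wings if the blanked core is too small.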

Testing: We've got to make sure this works. A few Messier objects should make a suitable testing set, including M31.

Cost: Runtime again (Roc ain't gonna like this). Still, these objects are rare, so what the heck, we should go for it.

(4) 5. Tune confusion noise parameters to push deeper in the ZoA.

Motivation: Improved completeness in the ZoA. Greater glory for 2MASS. Not relevant to lev-1 specs.

We estimated confusion noise using a model (see "Appendix B" and "Confusion Noise vs. Density") that is somewhat conservative. We can easily push another 0.5 mag deeper into the ZoA (and note that, using SNR limits instead of mag limits, we will be doing this more uniformly; see #2 above). See also the empirically derived confusion noise from my separate analysis.
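
A worked version of the depth argument, as a sketch: at a fixed SNR threshold the limiting flux scales with the total noise, so if background and confusion noise add in quadrature (our assumption), revising the confusion term moves the limit by 2.5 log10 of the noise ratio. Gaining 0.5 mag therefore requires the total noise to drop by a factor of 10^0.2 ≈ 1.58, which is only possible where confusion noise is a large part of the total.

```python
import math


def depth_gain_mag(sigma_bg, sigma_conf_old, sigma_conf_new):
    """Change in limiting magnitude (positive = deeper) when the confusion term changes.

    At a fixed SNR threshold the limiting flux is proportional to the total noise,
    sigma_tot = sqrt(sigma_bg**2 + sigma_conf**2), so the limit moves by
    2.5 * log10(sigma_tot_old / sigma_tot_new).
    """
    old_total = math.hypot(sigma_bg, sigma_conf_old)
    new_total = math.hypot(sigma_bg, sigma_conf_new)
    return 2.5 * math.log10(old_total / new_total)


# Confusion-dominated field: cutting the confusion term by 10**0.2 gains 0.5 mag.
print(round(depth_gain_mag(0.0, 1.0, 10 ** -0.2), 3))  # 0.5
# Background-dominated field: even removing confusion noise entirely gains little.
print(round(depth_gain_mag(1.0, 0.1, 0.0), 3))         # 0.005
```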

Cost: Runtime and reliability. The deeper you go (particularly in the ZoA), the more stars are in your way. For this to be practical, we will need more effective star-galaxy discrimination (see #7 below).

(9) 6. Use a better "seed" galaxy catalog.

Motivation: On rare occasions, big galaxies are screwed up in the pipe due to poor input position coords. These "mistakes" are avoidable.

The input galaxy catalog tells us where the big galaxies are located, so that we may specially process or mask them. Currently we are plagued by poor coordinates for many of these big galaxies. I propose that we eliminate from the catalog all galaxies that have large position uncertainties (greater than a few arcsec). Unfortunately, we will lose a lot of NGC and UGC galaxies. The good news is that NED is constantly updating and improving positions.
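
A minimal sketch of the position-quality cut, with a hypothetical catalog record and a 3 arcsec threshold standing in for "a few arcsec". Rather than discarding the rejected entries, the function returns them so they could be set aside for checking by hand.

```python
from dataclasses import dataclass


@dataclass
class SeedGalaxy:
    """Hypothetical seed-catalog record; field names are illustrative."""
    name: str
    ra_deg: float
    dec_deg: float
    pos_err_arcsec: float


def split_by_position_quality(catalog, max_err_arcsec=3.0):
    """Split a seed catalog into (usable, needs_checking) by positional uncertainty."""
    usable = [g for g in catalog if g.pos_err_arcsec <= max_err_arcsec]
    needs_checking = [g for g in catalog if g.pos_err_arcsec > max_err_arcsec]
    return usable, needs_checking


catalog = [
    SeedGalaxy("GAL_A", 10.0, -30.0, 1.0),
    SeedGalaxy("GAL_B", 20.0, -31.0, 12.0),
    SeedGalaxy("GAL_C", 30.0, -32.0, 3.0),
]
usable, needs_checking = split_by_position_quality(catalog)
print([g.name for g in usable], [g.name for g in needs_checking])
# ['GAL_A', 'GAL_C'] ['GAL_B']
```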

Cost: Time to generate the all-sky catalog (non-trivial). Also, by eliminating big galaxies with lousy positions, we are vulnerable to their "features" during processing (e.g., they will screw up the background removal). This is probably a minor concern, however.

(5) 7. Employ the decision tree algorithm in GALWORKS.

Motivation: Improve runtime and efficiency (particularly when the source density is high). This is a slam dunk.

Since we have a DT that *greatly* improves reliability (at a small cost to completeness), we might as well use it in the pipeline to help improve the runtime. The only hard part about the DT is generating the tree itself (see below). The DT will greatly improve matters in the ZoA, where triple stars terrorize the processor. See the plot showing the surface density of stars, doubles, triples and galaxies within the Milky Way (coming from the beast).
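
A minimal sketch of how a trained tree could sit in front of the expensive processing steps: each internal node tests one parameter against a threshold, and only sources that reach a "galaxy" leaf go on to full processing. The feature names and thresholds below are hypothetical placeholders, not the trained 2MASS DT.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """A binary decision-tree node; leaves carry a label, internal nodes a test."""
    feature: str = ""
    threshold: float = 0.0
    left: Optional["Node"] = None   # followed when value <= threshold
    right: Optional["Node"] = None  # followed when value > threshold
    label: Optional[str] = None     # set only on leaves


def classify(node, source):
    """Walk the tree from the root until a leaf is reached."""
    while node.label is None:
        node = node.left if source[node.feature] <= node.threshold else node.right
    return node.label


# Hypothetical two-level tree: small sources are called stars; larger ones are
# called stars if their central surface brightness is very bright (low mag).
tree = Node(
    feature="r_3sig_arcsec", threshold=5.0,
    left=Node(label="star"),
    right=Node(
        feature="sb_central_mag", threshold=14.0,
        left=Node(label="star"),
        right=Node(label="galaxy"),
    ),
)

sources = [
    {"r_3sig_arcsec": 3.0, "sb_central_mag": 15.0},
    {"r_3sig_arcsec": 9.0, "sb_central_mag": 13.0},
    {"r_3sig_arcsec": 9.0, "sb_central_mag": 16.5},
]
to_process = [s for s in sources if classify(tree, s) == "galaxy"]
print(len(to_process))  # 1
```

Evaluating a tree costs only a handful of comparisons per source, which is why it pays off most where stars vastly outnumber galaxies.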

Cost: Time to update the DTs. This is a non-trivial exercise that includes a lot of testing and analysis to make sure the DTs are working optimally. Also, incorporating the DTs into GALWORKS will take some time to code and test. Although this is a hefty order, the end result should justify the coding and testing time.

(7) 8. Compute "effective" surface brightness.

Motivation: Provide the user with a better measure of the central surface brightness. The SB is important for both star-galaxy discrimination and galaxy morphological classification. Our current scheme is anemic.

A lot of users are asking for a more meaningful "surface brightness" measurement. We currently provide the peak surface brightness and the mean central (r<5") surface brightness. The peak surface brightness is useless and can be jettisoned.

We also provide hundreds of photometric measures, which may then be converted (by the user) to surface brightness. What we are missing is an "effective" surface brightness (e.g., via a half-light radius). This issue is explored in the paper "Near-Infrared Galaxy Morphology Atlas"; see Section 5, Table 2 and Figure 17.

Schneider has suggested using some kind of "Kron" aperture.

I think we can afford to add three new columns to the output (J,H,K).

Cost: 3 new columns in the output table. We can eliminate other columns to make room for them.
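
As a sketch of one possible definition (not necessarily the one used in the Atlas paper): find the half-light radius from the curve of growth, then quote the mean surface brightness inside it. Since half of the total light falls within an ellipse of area π r_e² (b/a), the mean is <μ>_e = m_total + 2.5 log10(2π r_e² b/a). The linear interpolation and treating r_e as the semi-major axis are our assumptions.

```python
import math


def half_light_radius(radii, enclosed_flux, total_flux):
    """Radius enclosing half of total_flux, by linear interpolation of the curve of growth.

    radii must increase; enclosed_flux[i] is the flux inside radii[i].
    """
    half = 0.5 * total_flux
    prev_r, prev_f = 0.0, 0.0
    for r, f in zip(radii, enclosed_flux):
        if f >= half:
            return prev_r + (half - prev_f) * (r - prev_r) / (f - prev_f)
        prev_r, prev_f = r, f
    raise ValueError("curve of growth never reaches half of the total flux")


def mean_sb_within_re(total_mag, r_e_arcsec, axis_ratio=1.0):
    """Mean surface brightness (mag/arcsec^2) inside the half-light ellipse.

    Half the light falls in an area pi * r_e**2 * (b/a), so
    <mu>_e = m_total + 2.5*log10(2 * pi * r_e**2 * b/a).
    """
    return total_mag + 2.5 * math.log10(2.0 * math.pi * r_e_arcsec ** 2 * axis_ratio)


r_e = half_light_radius([1.0, 2.0, 3.0, 4.0], [20.0, 40.0, 60.0, 80.0], 80.0)
print(r_e)                                     # 2.0
print(round(mean_sb_within_re(10.0, 1.0), 3))  # 11.995
```

One value per band gives exactly the three new J, H, K columns proposed above.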

(n/a) 9. Utilize prophot chi-square information.

Motivation: Improve star-galaxy discrimination (?). The jury is still out (see plots below).

The PSF chi2 may be of some use. The plots below show the chi2 distribution for galaxies and for known double and triple stars. Unfortunately, the distributions are about the same for the fainter sources (K > 12 mag).

(6) 10. Improve bright star masking.

Motivation: Improve completeness around bright stars with high reliability.

It looks like we will have much better integrated flux measurements for saturated stars. This means we should be able to mask their effects better. We need to revisit this issue.

On a related matter, I have been promised for some time that "mapcor" will solve this problem. Mapcor must be tuned to handle confusion noise (or, equivalently, the functional form of the bright star masks must include a source density parameter). If mapcor turns out to work for low-, moderate- and high-density fields, then GALWORKS need not do this step (which would save a lot of CPU time). Under this scenario, the only stars that GALWORKS needs to mask are those outside the scan (hence catalogued via JCAT/RAYCAT). For catalog generation, a properly tuned "dbmapcor" should eliminate spurious sources from mega-bright stars (again, this has *never* been realized ... so we should be prepared to deal with it ourselves).

Cost: Lots of analysis.

(1) 11. Use a priori knowledge of nightly zero points.

Motivation: Improve SB measurements, photometry and other measures that rely upon the zero points.

This is straightforward. Currently we use pre-set zero points, tabulated in the "pipe.cal" file under sdata. We need either to modify this file to include the calibrated zero points or to create a new file containing them (e.g., "pipe2.cal").
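
A minimal sketch of the lookup, assuming calibrated nightly zero points keyed by (night, band) that fall back to the pre-set value when a night is missing. The zero-point numbers are placeholders, and the contents and format of "pipe.cal"/"pipe2.cal" are not assumed here.

```python
import math

# Placeholder values only; real numbers would come from "pipe.cal" (pre-set)
# and from the calibrated nightly zero points.
PRESET_ZP = {"J": 20.0, "H": 19.5, "K": 19.0}
NIGHTLY_ZP = {("000406s", "K"): 19.1}


def zero_point(night, band):
    """Calibrated nightly zero point if one exists, else the pre-set value."""
    return NIGHTLY_ZP.get((night, band), PRESET_ZP[band])


def calibrated_mag(counts, night, band):
    """Magnitude from instrumental counts: m = ZP - 2.5 log10(counts)."""
    return zero_point(night, band) - 2.5 * math.log10(counts)


print(round(calibrated_mag(100.0, "000406s", "K"), 3))  # 14.1 (nightly ZP)
print(round(calibrated_mag(100.0, "000407s", "K"), 3))  # 14.0 (pre-set fallback)
```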

12. A million other small (TBD) details.

And now for the more pie-in-the-sky proposals ...

(12) 13. Improve background removal.

Motivation: Improve photometry and reliability when the airglow is sour.

The H-band airglow is a major nuisance for background removal. We are usually successful in removing this stuff, but we also fail on occasion. Airglow can bump up the background, resulting in spurious detections and overestimated fluxes. Schneider has proposed that we attempt higher-order fits to remove these "bumps" and ridges. I am of the opinion that this is not robust: we would be fitting out real galaxies, throwing out the baby with the bath water. Steve's other idea is to use the frames, not the coadds, to remove the background. As Steve points out, this is the optimal approach for removing airglow. However, it is not practical (the impact on pixphot and GALWORKS is major).
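
A toy illustration of the "fitting out real galaxies" concern, not of any GALWORKS code: a noiseless 1-D strip with zero true background and one Gaussian "galaxy" is fitted with polynomial "backgrounds" of increasing order, and whatever the fit puts under the galaxy peak is flux that would be subtracted away. The strip length, galaxy width and polynomial orders are arbitrary choices for illustration.

```python
import math


def polyfit(xs, ys, degree):
    """Least-squares polynomial coefficients (constant term first) via normal equations."""
    n = degree + 1
    a = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= f * a[col][c]
            b[r] -= f * b[col]
    # Back substitution.
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        coeffs[i] = (b[i] - sum(a[i][j] * coeffs[j] for j in range(i + 1, n))) / a[i][i]
    return coeffs


def polyval(coeffs, x):
    return sum(c * x ** i for i, c in enumerate(coeffs))


def peak_left_after_fit(degree, npix=101, amp=10.0, sigma_pix=3.0):
    """Galaxy peak remaining after subtracting a polynomial 'background' fit.

    The strip has zero true background plus one Gaussian 'galaxy' at its centre,
    so anything the fit puts under the peak is galaxy flux thrown away.
    """
    centre = (npix - 1) // 2
    xs = [2.0 * p / (npix - 1) - 1.0 for p in range(npix)]  # scale to [-1, 1]
    ys = [amp * math.exp(-0.5 * ((p - centre) / sigma_pix) ** 2) for p in range(npix)]
    coeffs = polyfit(xs, ys, degree)
    return ys[centre] - polyval(coeffs, xs[centre])


for degree in (0, 2, 6):
    print(degree, round(peak_left_after_fit(degree), 2))
```

In this toy case the constant fit removes well under 10% of the peak, while the sixth-order fit absorbs a substantial fraction of it.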

Cost: Increase in runtime and a lot of testing & development. Even more fatal: the issue probably cannot be solved.

(9 ?) 14. Improve star-galaxy discrimination for the ZoA (beyond DT mod; see #7 above)

Motivation: Triple+++ stars have overrun the GC.

Triple stars have a distinct signature. There has got to be a robust way to identify these sources in the ZoA.

Cost: Runtime, no doubt. More importantly, development time, since we don't know how to do this. At one point Schneider (or Huchra?) was going to put together a grad-student think-tank to crack this nut. Nut still intact.

(11) 15. De-blend close-pair galaxies.

Motivation: Improve photometry around these interesting objects.

In principle this is possible; I developed a method to do just this. The problems are large, however: both runtime and robustness are questionable.

Cost: Runtime (this requires an iterative approach with more than two passes) and development. The cost/benefit ratio is up there.

(10) 16. Allow LCSB detection in the ZoA.

Motivation: Improve completeness for LSBs.

By simply tuning some parameters we can let the LCSB processor work deeper in the Galactic plane.

Cost: Runtime. Also, the output is sure to be mostly garbage.

On a related matter, we can also try new methods to detect LCSB galaxies, in particular the chi^2 image method of Szalay. This method looks very promising, but the development time will be steep.
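
As we understand Szalay's chi^2 method, the background-subtracted images in each band are divided by their noise, squared and summed pixel by pixel, so that faint emission present in all bands adds up even where no single band shows it. A minimal sketch for J, H and K, assuming a single noise value per band:

```python
def chi2_image(bands, sigmas):
    """Per-pixel chi^2 = sum over bands of (flux / sigma)**2.

    bands: background-subtracted images (lists of rows), one per band.
    sigmas: one noise value per band (a simplifying assumption).
    For pure Gaussian noise the result follows a chi^2 distribution with
    len(bands) degrees of freedom, so a single threshold applies to every pixel.
    """
    ny, nx = len(bands[0]), len(bands[0][0])
    return [
        [sum((img[y][x] / s) ** 2 for img, s in zip(bands, sigmas)) for x in range(nx)]
        for y in range(ny)
    ]


# Two-pixel toy images: an empty pixel and one at 1 sigma in every band.
j = [[0.0, 2.0]]
h = [[0.0, 3.0]]
k = [[0.0, 4.0]]
print(chi2_image([j, h, k], [2.0, 3.0, 4.0]))  # [[0.0, 3.0]]
```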

Response from Steve Schneider and my subsequent reply:

"Stephen E. Schneider" wrote:

> Hi Tom,
>
> Overall, I like your suggestions. I have a specific comments on a few of
> your recommendations:
>
> 1. I think the coverage maps are essential. I'd like to see the images a bit
> bigger too, if that's possible.

The coverage maps I'm referring to will be coadd-sized (512 x 1024). Is that what you mean?

> 2. I'm not sure I understand the distinction between flux and SNR limits
> you're making here. Can you explain it more?

We use mag thresholds to set the lower flux limits (faintness) to which we work, and we throttle these back by the confusion-noise equivalent. Instead of mag thresholds, we should work with SNR thresholds, which correct for variable backgrounds (i.e., background noise that is bobbing up and down).

> I'd also like to see an improvement in the isophotal levels being used. In
> the current version I believe we use instrumental magnitudes for some of
> the calculations (like isophotal size). That can be corrected now that
> we have the calibration information available.

You are correct. I forgot about this detail (and others as well). We can certainly use the correct zero points. Roc promises me that they will be available for the reprocessing.

> 4. As we discussed last week, the big galaxy problem may require parallel
> approaches--doing what you say, but also feeding in the positions of known
> sources and "forcing" the pipeline to make measurements within the given
> aperture.

Can do. What kind of catalog would this be? Hand-generated? Or just the NED catalog of big, bright galaxies (like M31)?

> 6. Rather than eliminating any big galaxies with poor coordinates, I
> would like to hire an undergraduate to check the positions. I've done
> it already with planetary nebulae, and I think it can be done reasonably
> quickly for the 10,000 biggest galaxies with poor coordinates.

Gotta get moving on this ... running out of time. I would luv this catalog.

> I'm also wondering about other issues, like handling the jumps in background
> level across 1/6th frame edges and due to electronic noise. I'll dig out my
> notes from our long-ago meetings and think more about this.

Aha, I had completely forgotten about the J-banding (mainly because the problem has disappeared). Well, it is nasty all right. I shudder to think how we can solve this problem (other than re-observing the affected scans).

> ---Steve

-tj

A Note on Testing

A number of repeated scans of Abell 3558 have been acquired and reduced. They include the following scans:

980319s, 87-94, 101-105

980328s, 88-94

000406s, 54-58

000407s, 55-58

000408s, 48, 55-58

000411s, 55-59

000412s, 55-57

000419s, 44-48

000421s, 41-45

000422s, 42-46

000423s, 41-45

000424s, 42-46

000425s, 42-46

000428s, 49-50, 57-58

I am now performing repeatability tests with the current (v2.?) GALWORKS results. These data will also be used to test the V3 mods.

Additional repeat data exist for one scan through Orion and for dozens of repeat scans through the ChamI dark cloud.